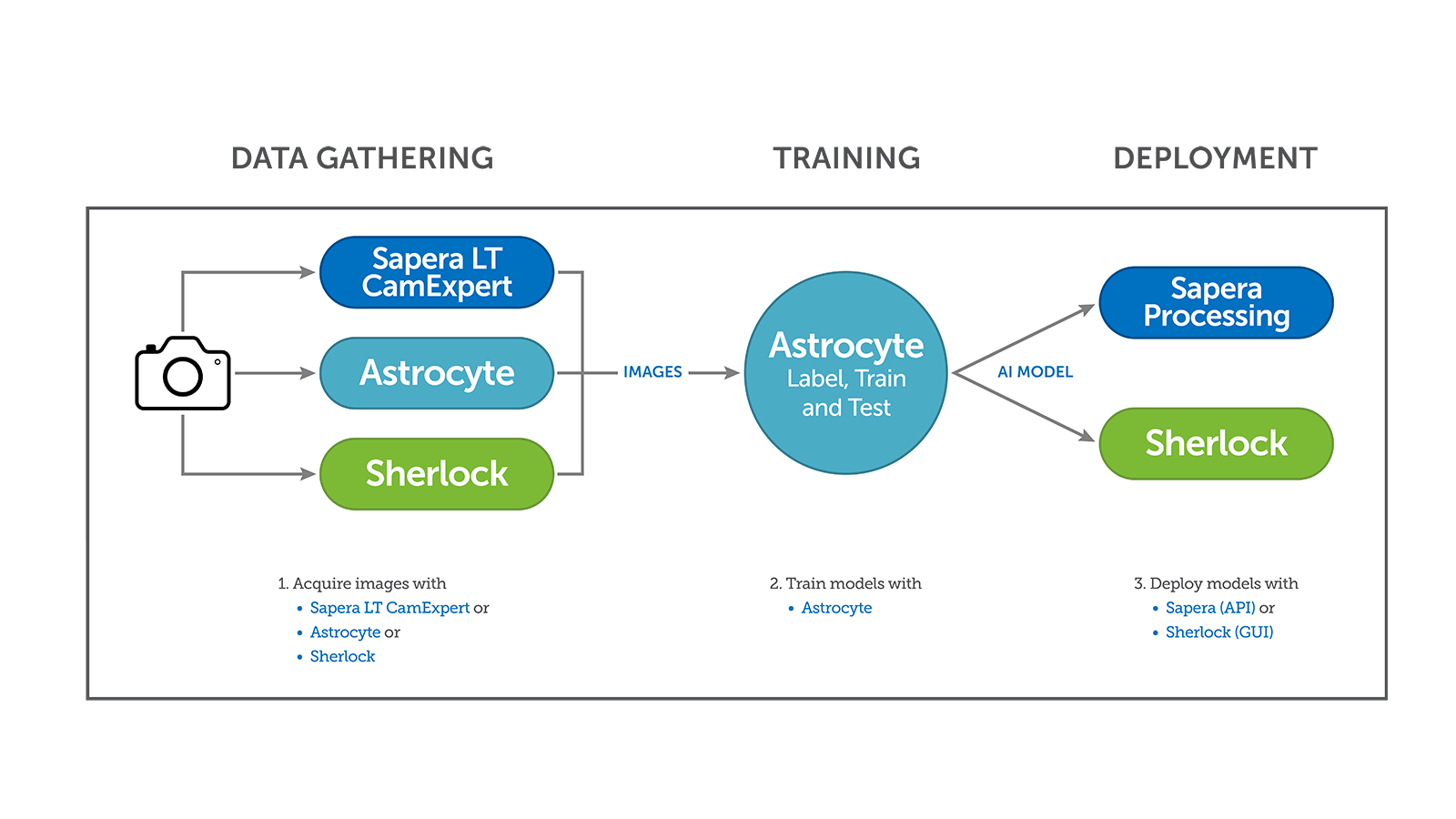

Teledyne DALSA Astrocyte lets users train neural networks on their own images of products, samples, and defects to perform tasks such as anomaly detection, classification, object detection, and segmentation. Through its flexible graphical user interface, users can visualize and interpret models to assess performance and accuracy, then export them to files that are ready for runtime in the Teledyne DALSA Sapera and Sherlock vision software platforms.

Traditional Machine Vision Tools

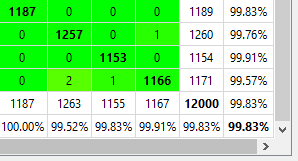

AI Classification Algorithm

Astrocyte to Inspect Shredded Plastic Particles in Recycling Industry

Plastic particles, such as those from beverage bottles, undergo a multi-step recycling and inspection process before they are reused in new plastic products.

Astrocyte is used to locate and classify more than 30 types of materials with high accuracy, ensuring high-quality material with fewer than 10 parts per million of contaminants.

Article originally published in Vision System Design.

Astrocyte significantly improves quality, productivity, and efficiency in X-ray medical imaging

The tiny size and random nature of fibers on X-ray detectors make them challenging and time-consuming to find, whether by traditional methods or by human operators.

With Astrocyte, the X-ray solutions team was able to rapidly identify all defects and even outperform their operators.

Article originally published in Novus Light.


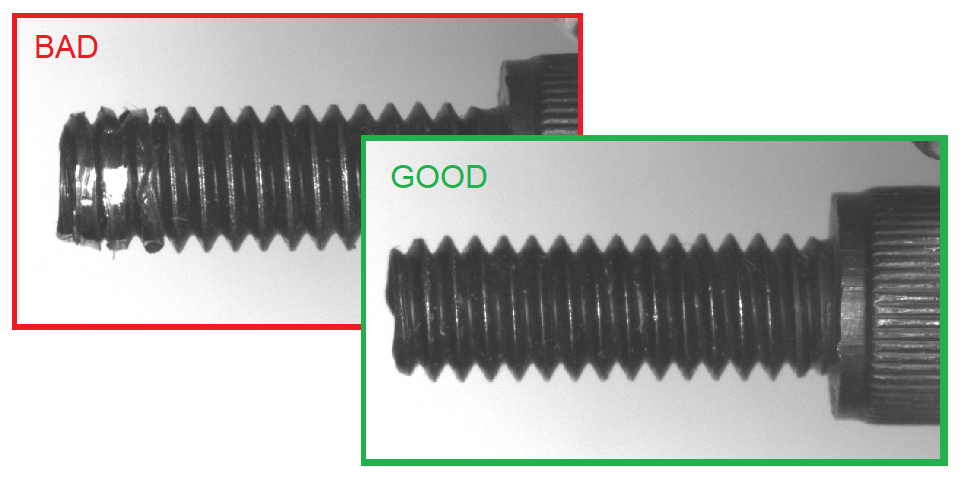

Inspection of screw threads

Classification of good and bad screw tips. Subtle defects on screw threads are detected and classified as bad samples. Astrocyte detects small defects in high-resolution images of reflective surfaces. Only a few tens of samples are required to train a model with good accuracy. Classification is used when both good and bad samples are available, while Anomaly Detection is used when only good samples are available.

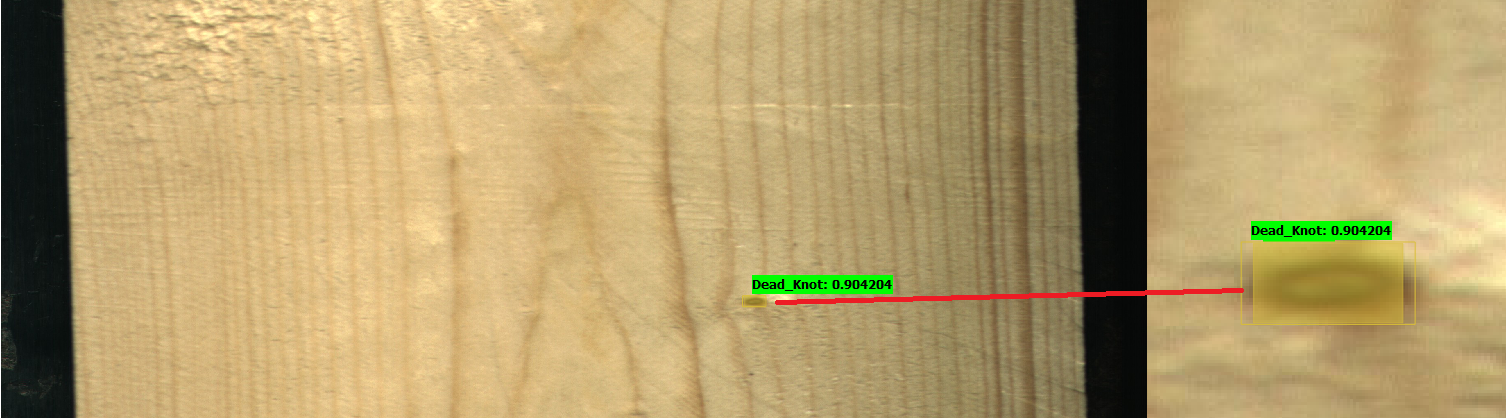

Location/identification of wood knots

Localization and classification of various types of knots in wood planks. Astrocyte can robustly locate and classify small knots, 10 pixels wide, in high-resolution 2800 x 1024 images using the tiling mechanism, which preserves native resolution.
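The idea of tiling can be sketched in a few lines of Python. This is a minimal illustration only and not Astrocyte's actual implementation: the function names, the 512 x 512 tile size, and the 16-pixel overlap are assumptions chosen for the example. The image is split into overlapping tiles at native resolution, so a 10-pixel knot is never shrunk by downscaling and is not cut in half at a tile border.

```python
def tile_origins(length, tile, overlap):
    """Start offsets of tiles covering [0, length), sharing at least `overlap` pixels."""
    if tile >= length:
        return [0]  # a single tile already covers this axis
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile sits flush with the image edge
    return starts


def tile_boxes(width, height, tile_w, tile_h, overlap):
    """Return (x, y, w, h) boxes, in pixels, for every tile of the image."""
    w, h = min(tile_w, width), min(tile_h, height)
    return [
        (x, y, w, h)
        for y in tile_origins(height, tile_h, overlap)
        for x in tile_origins(width, tile_w, overlap)
    ]


# Hypothetical settings: 2800 x 1024 image (from the text), 512 x 512 tiles,
# 16-pixel overlap so that a 10-pixel knot always fits whole in some tile.
for box in tile_boxes(2800, 1024, 512, 512, 16):
    print(box)
```

Each box can then be cropped from the full image and passed to the model unchanged, with detections shifted back by the tile's `(x, y)` offset.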

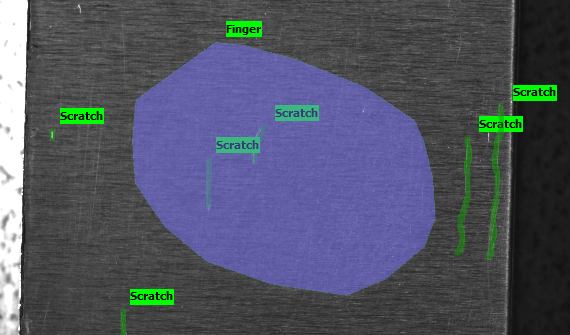

Surface inspection of metal plates

Detection and segmentation of various types of defects (such as scratches and fingerprints) on brushed metal plates. Astrocyte outputs shapes in which each pixel is assigned a class. Applying a blob tool to the segmentation output enables shape analysis of the defects.
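To make the blob step concrete, here is a small sketch of blob analysis on a segmentation output. It is a generic connected-component pass written for illustration, not the Sapera or Sherlock blob tool; the function name `find_blobs` and the class IDs (1 for scratch, 2 for fingerprint) are assumptions.

```python
from collections import deque


def find_blobs(mask, target_class):
    """Return area and bounding box of each 4-connected region of `target_class`."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if mask[y0][x0] != target_class or seen[y0][x0]:
                continue
            # Breadth-first flood fill from this unvisited seed pixel.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            area = 0
            min_x = max_x = x0
            min_y = max_y = y0
            while queue:
                x, y = queue.popleft()
                area += 1
                min_x, max_x = min(min_x, x), max(max_x, x)
                min_y, max_y = min(min_y, y), max(max_y, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and mask[ny][nx] == target_class):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append({"area": area, "bbox": (min_x, min_y, max_x, max_y)})
    return blobs


# Toy per-pixel class map: 0 = background, 1 = scratch, 2 = fingerprint (hypothetical IDs).
mask = [
    [0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 2],
    [0, 0, 0, 0, 2, 2],
    [1, 0, 0, 0, 0, 0],
]
scratches = find_blobs(mask, 1)
fingerprints = find_blobs(mask, 2)
```

From the area and bounding box of each blob, simple shape rules follow naturally, for example treating long, thin blobs as scratches and ignoring blobs below a minimum area.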

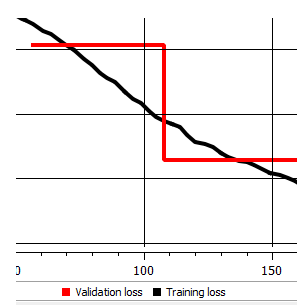

Astrocyte supports the following deep learning architectures.

Description

A generic classifier to identify the class of an image.

Typical Usage

Use in applications where multiple class identification is required. For example, it can be used to identify several classes of defects in industrial inspection. It can train in the field using continual learning.

Description

A binary classifier (good/bad) trained on “good” images only.

Typical Usage

Use in defect inspection where simply finding defects is sufficient (no need to classify defects). Useful on imbalanced datasets where many “good” images and a few “bad” images are available. Does not require manual graphical annotations and very practical on large datasets.

Description

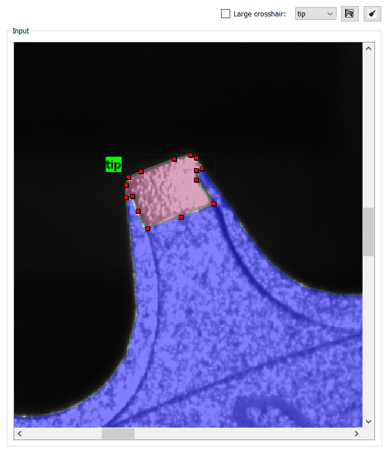

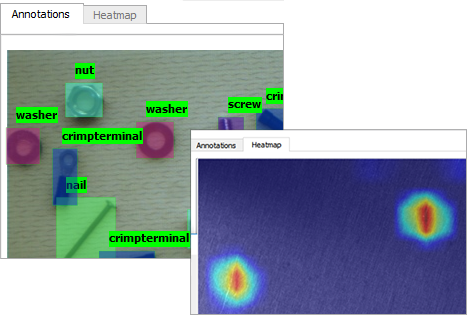

An all-in-one localizer and classifier. Object detection finds the location and the orientation of an object in an image and classifies it.

Typical Usage

Use in applications where the position and orientation of objects is important. For example, it can be used to provide the location and class of defects in industrial inspection.

Description

A pixel-wise classifier. Segmentation associates each image pixel with a class. Connected pixels of the same class create identifiable regions in the image.

Typical Usage

Use in applications where the size and/or shape of objects are required. For example, it can be used to provide location, class, and shape of defects in industrial inspection.

Generating image samples

Importing image samples

Importing/creating annotations (ground truth)

Visualizing/editing/handling dataset

| Module | Dataset | Image Size | Input Size | RTX 3070 (ms) | RTX 3090 (ms) | RTX 4090 (ms) | Intel CPU (ms) | AMD CPU (ms) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Anomaly Detection | Metal | 2592 x 2048 x 1 | 1024 x 1024 x 1 | 21.0 | 13.0 | 9.0 | 275 | 645 |
| Classification | Screw | 768 x 512 x 1 | 768 x 512 x 1 | 3.1 | 2.2 | 1.2 | 31.9 | 41.3 |
| Object Detection | Hardware | 1228 x 920 x 3 | 512 x 512 x 3 | 3.8 | 3.2 | 3.0 | 31.7 | 46.3 |
| Segmentation | Scratches | 2048 x 2048 x 1 | 1024 x 1024 x 1 | 22.3 | 16.6 | 8.9 | 222 | 391 |

The first four columns describe the model; the remaining columns give the inference time in milliseconds.

Module: AI model type

Intel CPU: Intel Core-i9 12900K @ 3.2GHz
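As a rough way to read these inference times, the sketch below converts them to images per second and computes GPU-over-CPU speedups. The numbers are copied from the table; the helper names are hypothetical. The throughput figure assumes one image is processed at a time and ignores acquisition, pre-processing, and post-processing, so real application rates will be lower.

```python
# Inference times in milliseconds, copied from the performance table.
INFERENCE_MS = {
    "Anomaly Detection": {"RTX 3070": 21.0, "RTX 3090": 13.0, "RTX 4090": 9.0,
                          "Intel CPU": 275.0, "AMD CPU": 645.0},
    "Classification": {"RTX 3070": 3.1, "RTX 3090": 2.2, "RTX 4090": 1.2,
                       "Intel CPU": 31.9, "AMD CPU": 41.3},
    "Object Detection": {"RTX 3070": 3.8, "RTX 3090": 3.2, "RTX 4090": 3.0,
                         "Intel CPU": 31.7, "AMD CPU": 46.3},
    "Segmentation": {"RTX 3070": 22.3, "RTX 3090": 16.6, "RTX 4090": 8.9,
                     "Intel CPU": 222.0, "AMD CPU": 391.0},
}


def images_per_second(inference_ms):
    """Upper bound on throughput if inference is the only cost."""
    return 1000.0 / inference_ms


def speedup(module, fast, slow):
    """How many times faster `fast` is than `slow` for the given module."""
    times = INFERENCE_MS[module]
    return times[slow] / times[fast]


for module, times in INFERENCE_MS.items():
    fps = images_per_second(times["RTX 4090"])
    gain = speedup(module, "RTX 4090", "Intel CPU")
    print(f"{module}: {fps:.0f} images/s on RTX 4090, {gain:.1f}x faster than Intel CPU")
```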

| | Sapera Processing (Inference) | Astrocyte (Training) |
| --- | --- | --- |
| Operating System | Windows 10 or 11 (64-bit) | Windows 10 or 11 (64-bit) |
| CPU | Intel® Processor w/ EM64T technology | Intel® Processor w/ EM64T technology; minimum 16 GB RAM (32 GB ideal) |
| GPU | (Optional) An NVIDIA GPU for higher speed | An NVIDIA GPU |